This paper introduces Continuous Thought Machines (CTM), a neural architecture that treats temporal dynamics as a fundamental computational primitive rather than an abstraction to be eliminated. Standard neural networks process information in discrete, static layers. CTMs instead let "thought" unfold over an internal time dimension and use neural synchronization patterns as the core representation for taking actions.

The results are striking. CTMs trained on 39×39 mazes solve 99×99 mazes, which have about 6× as many cells and lie far outside the training distribution. They reach near-perfect accuracy on cumulative parity where LSTMs fail, and they show better-than-human calibration on image classification. They also exhibit emergent behaviors such as adaptive compute allocation and interpretable "thinking" trajectories, none of which they were explicitly designed for.

The key insight is that each neuron can keep its own temporal history and learn its own dynamics through Neuron-Level Models (NLMs). This lets CTMs balance computational efficiency against biological plausibility, and it opens new research directions toward systems that exhibit more human-like intelligence.

Imagine your brain as an orchestra. In a standard neural network, every musician plays their note at the same instant, so there is no melody, just a single chord. In a Continuous Thought Machine, the orchestra plays over time. The violins start, the cellos join in, patterns form between instruments, and the final piece arises from how they synchronize. The CTM watches which neurons "fire together" across time and bases its decisions on that synchronization pattern, not just on the final notes. This is closer to how real brains work, where the timing and rhythm of neural activity carry meaningful information.

Standard neural networks treat computation as instantaneous: input goes in, activations propagate through layers, and output comes out. Time enters the picture only when the data itself is sequential. Biological neural systems work differently, because when a neuron fires matters as much as whether it fires.

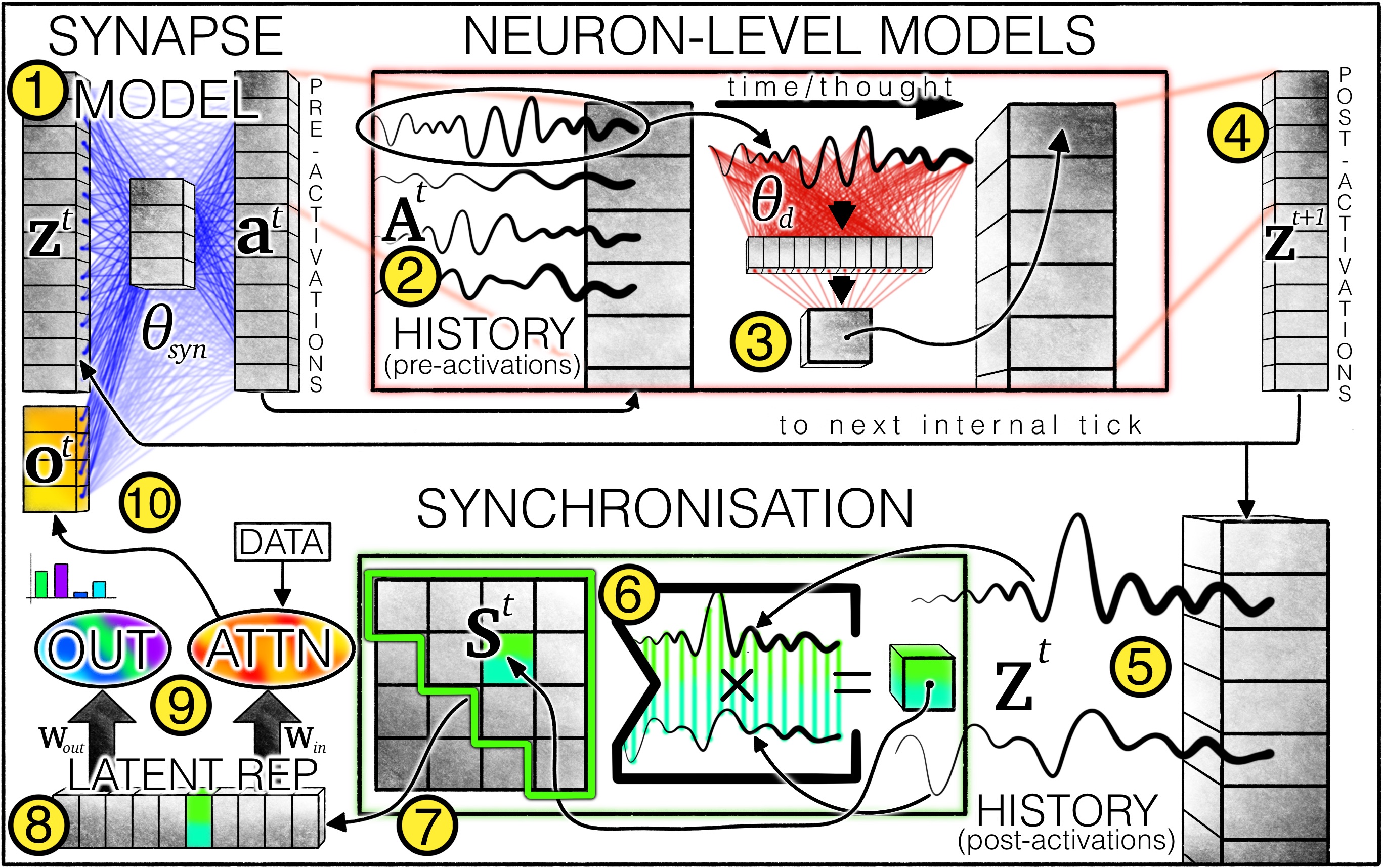

CTM introduces an internal recurrence timeline t ∈ {1, ..., T} that is independent of any sequential structure in the data. Even a single static image is processed over multiple internal "ticks", which lets the model refine its representations iteratively. This internal time allows the model to "think" about an input rather than merely react to it.
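
A minimal sketch of the idea follows. It uses a toy `tanh` update in place of the CTM's real synapse and NLM machinery. The input never changes, but the hidden state is revised on every internal tick. `run_internal_ticks` and `mix` are hypothetical names chosen for illustration.

```python
import math
from typing import List


def run_internal_ticks(features: List[float], num_ticks: int, mix: float = 0.5) -> List[List[float]]:
    """Refine a hidden state over internal ticks while the input stays fixed.

    Toy stand-in (assumption): the real CTM uses a synapse model plus
    per-neuron NLMs; here each unit is simply tanh(mix * state + input).
    """
    state = [0.0] * len(features)
    trajectory: List[List[float]] = []
    for _ in range(num_ticks):
        # The same static input is presented on every tick; only the state evolves.
        state = [math.tanh(mix * s + x) for s, x in zip(state, features)]
        trajectory.append(state)
    return trajectory


# One static "image" (two features) processed over five internal ticks.
for tick, state in enumerate(run_internal_ticks([0.5, -1.0], num_ticks=5), start=1):
    print(tick, [round(v, 3) for v in state])
```

The loop is what matters here. The number of ticks is a free choice, unrelated to the length of the input.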

Through Neuron-Level Models (NLMs), each neuron gets its own private MLP that processes a rolling history of its most recent pre-activations. In standard recurrent networks, every neuron applies the same fixed activation function to its current input. NLMs instead let each neuron learn its own temporal dynamics, much as biological neurons have different time constants and response properties.
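
The sketch below shows one possible shape for per-neuron models. Each neuron owns separate weights and sees only its own last M pre-activations, kept in a `deque` with `maxlen=M`. The class and function names, the hidden size, the `tanh` nonlinearity, and the weight initialization are all illustrative assumptions, not the paper's implementation. A practical version would batch every neuron's private weights into a single tensor operation instead of looping.

```python
import math
import random
from collections import deque
from typing import Deque, List


class NeuronLevelModel:
    """One neuron's private MLP over its last M pre-activations (sizes and tanh are assumptions)."""

    def __init__(self, history_len: int, hidden: int, rng: random.Random) -> None:
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(history_len)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]
        self.b2 = 0.0

    def __call__(self, history: List[float]) -> float:
        hidden = [math.tanh(sum(w * x for w, x in zip(row, history)) + b)
                  for row, b in zip(self.w1, self.b1)]
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2


def nlm_step(nlms: List[NeuronLevelModel], histories: List[Deque[float]],
             pre_activations: List[float]) -> List[float]:
    """Append each neuron's newest pre-activation, then apply that neuron's own NLM."""
    post_activations = []
    for nlm, history, a in zip(nlms, histories, pre_activations):
        history.append(a)  # deque(maxlen=M) silently drops the oldest entry
        post_activations.append(nlm(list(history)))
    return post_activations


rng = random.Random(0)
M, D = 4, 3  # history length and neuron count (illustrative values)
nlms = [NeuronLevelModel(M, hidden=8, rng=rng) for _ in range(D)]
histories = [deque([0.0] * M, maxlen=M) for _ in range(D)]
z = nlm_step(nlms, histories, [0.1, -0.2, 0.3])
```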

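Rather than using raw activations directly, CTM computes a synchronization matrix $S^t = Z^t \cdot (Z^t)^\top$. Row i of $Z^t$ holds neuron i's post-activation history from tick 1 to tick t. Entry (i, j) of $S^t$ is the inner product of the histories of neurons i and j, so it is large when the two neurons tend to be active together. This matrix, which records which neurons "fire together", is the fundamental representation used for outputs and attention. In practice, only a sampled subset of neuron pairs is used. The design mirrors biological findings that neural synchronization carries meaningful information.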
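
Here is a small sketch of computing $S = Z Z^\top$ from activation histories, plus a variant that keeps only selected neuron pairs. It uses plain lists of lists, and the chosen pairs are arbitrary. Both are illustrative assumptions rather than the paper's sampling scheme.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def synchronization(Z: Sequence[Sequence[float]]) -> Matrix:
    """S = Z · Zᵀ, where row i of Z is neuron i's post-activation history (ticks 1..t)."""
    return [[sum(a * b for a, b in zip(zi, zj)) for zj in Z] for zi in Z]


def sampled_synchronization(Z: Sequence[Sequence[float]],
                            pairs: Sequence[Tuple[int, int]]) -> List[float]:
    """Only the chosen (i, j) entries of S, flattened into a feature vector."""
    return [sum(a * b for a, b in zip(Z[i], Z[j])) for i, j in pairs]


# Three neurons over three ticks: neurons 0 and 1 fire together; neuron 2 fires alone.
Z = [[1, 0, 1],
     [1, 0, 1],
     [0, 1, 0]]
print(synchronization(Z))                            # [[2, 2, 0], [2, 2, 0], [0, 0, 1]]
print(sampled_synchronization(Z, [(0, 1), (0, 2)]))  # [2, 0]
```

The co-active pair (0, 1) scores 2, and the never-co-active pair (0, 2) scores 0. Sampling pairs keeps the feature vector far smaller than the full D × D matrix.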

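Each of these components echoes a neuroscience principle. The timing of neural activity carries information, individual neurons have distinct response properties, and synchronized firing is itself a signal.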

A critical innovation in CTM is how it handles training across internal ticks. Rather than forcing the network to produce correct outputs at one fixed tick, CTM selects, for each example, the ticks at which its outputs are evaluated.

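The CTM training objective combines two key time points:

- $t_1$ is the tick at which the loss is lowest.
- $t_2$ is the tick at which certainty is highest. Certainty is defined as one minus the normalized entropy of the prediction.

The final loss is the average of the two: $L = (L^{t_1} + L^{t_2}) / 2$.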

This loss formulation creates an emergent curriculum. Early in training, easy examples reach low loss after only a few ticks (a small t1), while hard examples need more internal steps. As training progresses, the model learns to spend more internal computation on difficult inputs and less on easy ones. The certainty term (t2) encourages the model to commit confidently to its decisions.
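
The sketch below shows the two-tick selection. It assumes cross-entropy as the per-tick loss and defines certainty as one minus the prediction's entropy divided by log(number of classes). The function names are hypothetical. Ticks are 0-indexed in the code, whereas the text counts from 1.

```python
import math
from typing import Sequence, Tuple


def cross_entropy(probs: Sequence[float], target: int) -> float:
    return -math.log(max(probs[target], 1e-12))  # clamp avoids log(0)


def certainty(probs: Sequence[float]) -> float:
    """1 - normalized entropy: 1.0 for a one-hot prediction, 0.0 for a uniform one."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return 1.0 - entropy / math.log(len(probs))


def ctm_loss(per_tick_probs: Sequence[Sequence[float]], target: int) -> Tuple[float, int, int]:
    """Average the loss at t1 (lowest-loss tick) and t2 (most-certain tick)."""
    losses = [cross_entropy(p, target) for p in per_tick_probs]
    certainties = [certainty(p) for p in per_tick_probs]
    t1 = min(range(len(losses)), key=losses.__getitem__)
    t2 = max(range(len(certainties)), key=certainties.__getitem__)
    return (losses[t1] + losses[t2]) / 2, t1, t2


# Class 0 is correct. Tick 1 is right; tick 2 is confidently wrong.
ticks = [[0.5, 0.5], [0.8, 0.2], [0.05, 0.95]]
loss, t1, t2 = ctm_loss(ticks, target=0)
print(t1, t2, round(loss, 3))  # 1 2 1.609
```

In this example the most-certain tick is also wrong, so the t2 term pulls the loss up. This is how the objective penalizes confident mistakes even when some other tick got the answer right.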

The first major experiment applies CTM to ImageNet classification, revealing interpretable "thinking" processes and exceptional calibration properties.

Unlike black-box models, CTM's attention maps reveal how the model processes images over time. The attention starts broad, covering the entire image, then progressively focuses on discriminative regions. This mirrors human visual attention: we first take in the whole scene, then focus on relevant details.

On CIFAR-10, CTM is better calibrated than humans: when the model reports 80% confidence, it is correct roughly 80% of the time. This matters for real-world deployment, where knowing when you don't know is as important as knowing the answer.
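
A common way to quantify calibration is expected calibration error (ECE), which is sketched below. This metric is our assumption here; the source does not say how calibration was scored. ECE groups predictions into equal-width confidence bins and averages the gap between accuracy and mean confidence in each bin, weighted by bin size. The bin count of 10 is also an assumption.

```python
from typing import Sequence


def expected_calibration_error(confidences: Sequence[float], correct: Sequence[bool],
                               n_bins: int = 10) -> float:
    """Weighted mean |accuracy - confidence| over equal-width confidence bins."""
    total = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Bins are (lo, hi]; the first bin also takes confidence exactly 0.
        members = [i for i, c in enumerate(confidences) if lo < c <= hi or (b == 0 and c == 0.0)]
        if not members:
            continue
        accuracy = sum(correct[i] for i in members) / len(members)
        mean_conf = sum(confidences[i] for i in members) / len(members)
        ece += len(members) / total * abs(accuracy - mean_conf)
    return ece


# Ten predictions at 80% confidence with 8 correct: well calibrated.
calibrated = expected_calibration_error([0.8] * 10, [True] * 8 + [False] * 2)
# Ten predictions at 90% confidence with only 5 correct: overconfident.
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
print(round(calibrated, 3), round(overconfident, 3))  # 0.0 0.4
```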

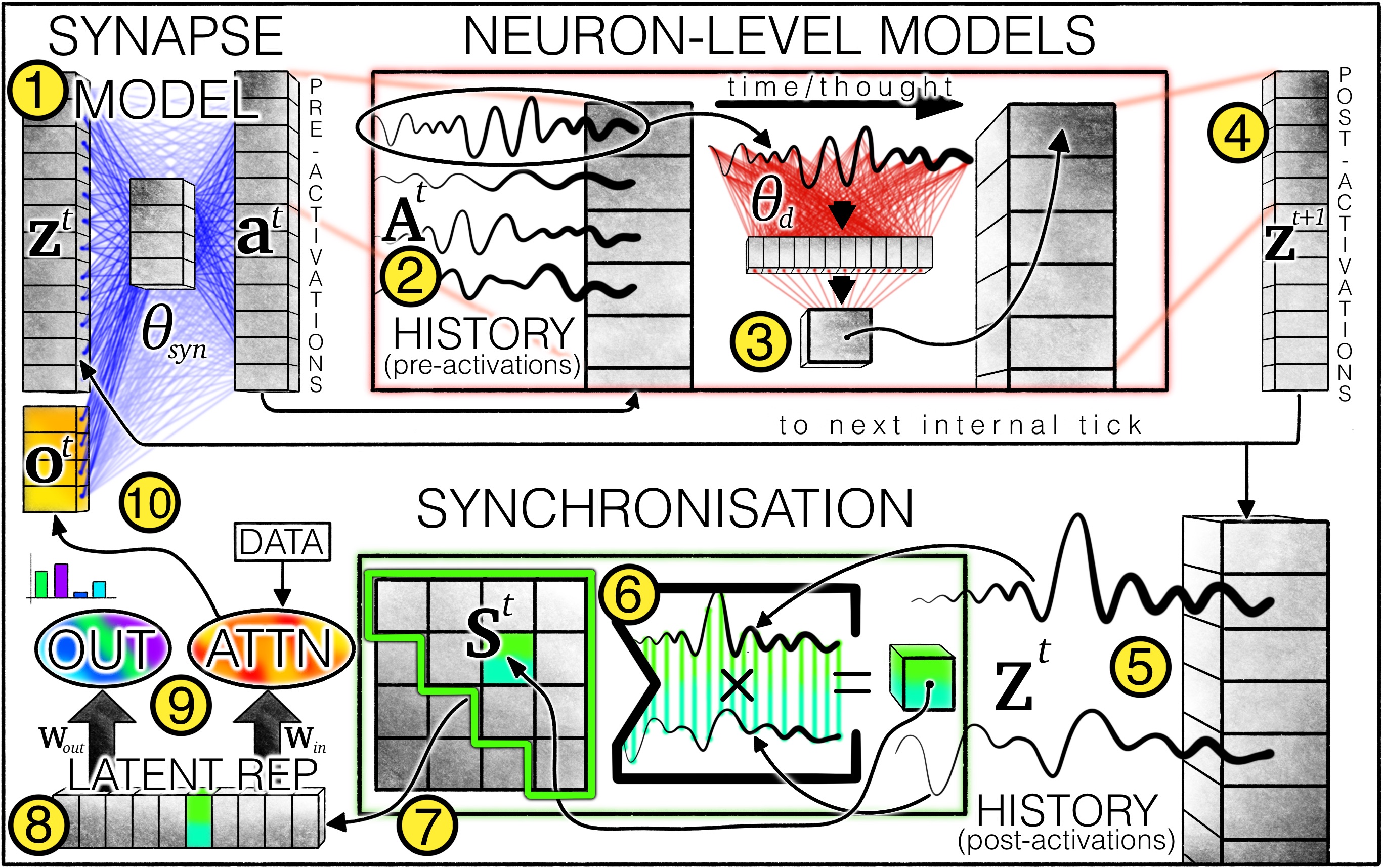

Visualized over time, CTM neuron activations show rich dynamics that emerge spontaneously. These include periodic activity, traveling waves, and multi-scale temporal structure, even though nothing in the architecture was designed to produce periodicity. Different neurons develop different characteristic patterns.

The baseline's activations, by contrast, evolve relatively monotonically toward a fixed point, with less temporal structure and less diversity across neurons.

The maze navigation task demonstrates CTM's ability to build internal world models and generalize far beyond training distribution.

CTMs trained on 39×39 mazes (path lengths of about 100 steps) successfully solve 99×99 mazes. The larger mazes contain roughly 6.4× as many cells (99² = 9,801 versus 39² = 1,521). This is more than slight extrapolation: the model solves substantially larger problems than any it saw in training.

Analysis of the attention patterns suggests that CTM builds an internal world model of the maze. The attention traces the solution path from start to finish, which indicates that the model has learned the underlying structure of maze navigation rather than memorizing patterns.

Unlike Transformers, which typically rely on explicit positional encodings, CTM solves mazes without any positional information. The temporal dynamics appear to supply the structure needed to understand spatial relationships. This suggests the internal time dimension can serve as a general-purpose computational scaffold.

The cumulative parity task tests whether models can perform sequential algorithmic reasoning: given a sequence of bits, output the running XOR at each position.
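
Generating the task's targets takes only a few lines, as sketched below. Each target is the XOR of every bit so far, so the output at the last position depends on the entire input. The sequence length of 64 and the function names are illustrative assumptions.

```python
import random
from itertools import accumulate
from operator import xor
from typing import List, Tuple


def cumulative_parity(bits: List[int]) -> List[int]:
    """Target at position k is bits[0] XOR ... XOR bits[k]."""
    return list(accumulate(bits, xor))


def make_example(length: int, rng: random.Random) -> Tuple[List[int], List[int]]:
    bits = [rng.randint(0, 1) for _ in range(length)]
    return bits, cumulative_parity(bits)


print(cumulative_parity([1, 0, 1, 1]))  # [1, 1, 0, 1]
bits, targets = make_example(64, random.Random(0))  # length 64 is illustrative
```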

| Model | Internal Ticks | Final Accuracy | Notes |
|---|---|---|---|
| LSTM | N/A | ~20% | Struggles regardless of capacity |
| CTM | 10 | ~40% | Insufficient thinking time |
| CTM | 25 | ~70% | Improving with more ticks |
| CTM | 75+ | ~100% | Near-perfect performance |

Analysis of the attention patterns reveals something striking: the CTM's attention can move backward through the sequence. Typical sequential models read left to right. The CTM instead chooses its own reading order and appears to "plan" how it traverses the input. Nobody designed this strategy; it emerged from the architecture's freedom to allocate attention over internal time.

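The Q&A MNIST task tests memory. The model sees a sequence of MNIST digit images and then receives queries that specify which earlier digits to combine and which operation to apply (modular arithmetic in the paper). To answer, it must recall digits it saw many ticks earlier.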

The generalization grids show how well models trained on shorter sequences perform on longer ones:

For the CTM, accuracy stays high across variations in sequence length. Its synchronization-based memory supports robust recall.

For the LSTM baseline, performance degrades more rapidly as sequence length grows. Traditional recurrent memory struggles with out-of-distribution lengths.

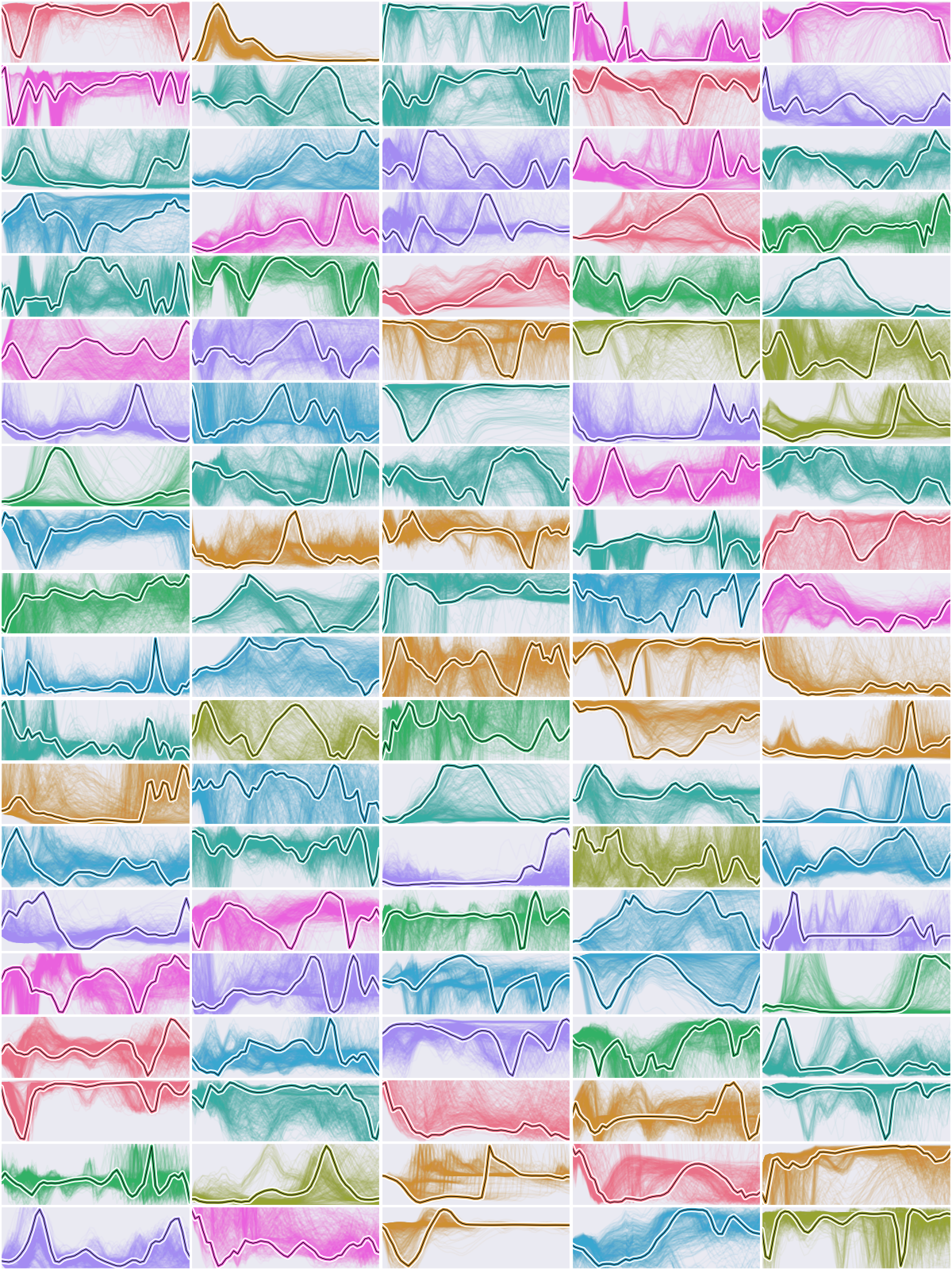

The sorting task reveals CTM's ability to allocate computation adaptively based on problem difficulty.

Analysis shows that CTM's "wait time" before outputting each sorted element correlates with the gap between consecutive sorted values. When two numbers are close together and therefore harder to order, the model takes more internal ticks to decide. When they are far apart, it decides quickly. The model was never explicitly trained to allocate computation by difficulty; this behavior emerged naturally.
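
The analysis can be sketched in two steps. First, compute the gap between each sorted element and its predecessor. Second, correlate those gaps with the model's per-element wait times. Pearson correlation and the helper names are assumptions; the source does not specify the statistic. `wait_ticks` would come from the trained model and is not computed here.

```python
import math
from typing import List, Sequence


def sorted_gaps(values: Sequence[float]) -> List[float]:
    """Gap between each element of the sorted output and the element before it."""
    s = sorted(values)
    return [b - a for a, b in zip(s, s[1:])]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient (assumes neither input is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


gaps = sorted_gaps([0.3, -1.2, 0.35, 2.0])  # approximately [1.5, 0.05, 1.65]
# With real per-element wait times: pearson(gaps, wait_ticks)
```

If close values take longer, as described above, the correlation between gap size and wait time would come out negative.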

An analysis of neuron similarity suggests why width helps: wider models develop more diverse neuron behaviors. With more neurons, each can specialize in different temporal patterns, which creates a richer representational space.
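
The source does not say which similarity measure was used. One plausible measure, sketched below as an assumption, is the mean absolute cosine similarity over all pairs of neuron activation traces, where lower values indicate more diverse behavior. The function names and toy traces are hypothetical.

```python
import math
from itertools import combinations
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def mean_abs_pairwise_similarity(traces: Sequence[Sequence[float]]) -> float:
    """Average |cosine| over all neuron pairs; lower means more diverse behavior.

    Each trace is one neuron's activation over internal ticks (must be non-zero).
    """
    pairs = list(combinations(range(len(traces)), 2))
    return sum(abs(cosine(traces[i], traces[j])) for i, j in pairs) / len(pairs)


redundant = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [0.5, 1.0, 1.5]]
diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(round(mean_abs_pairwise_similarity(redundant), 3))  # 1.0
print(round(mean_abs_pairwise_similarity(diverse), 3))    # 0.0
```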

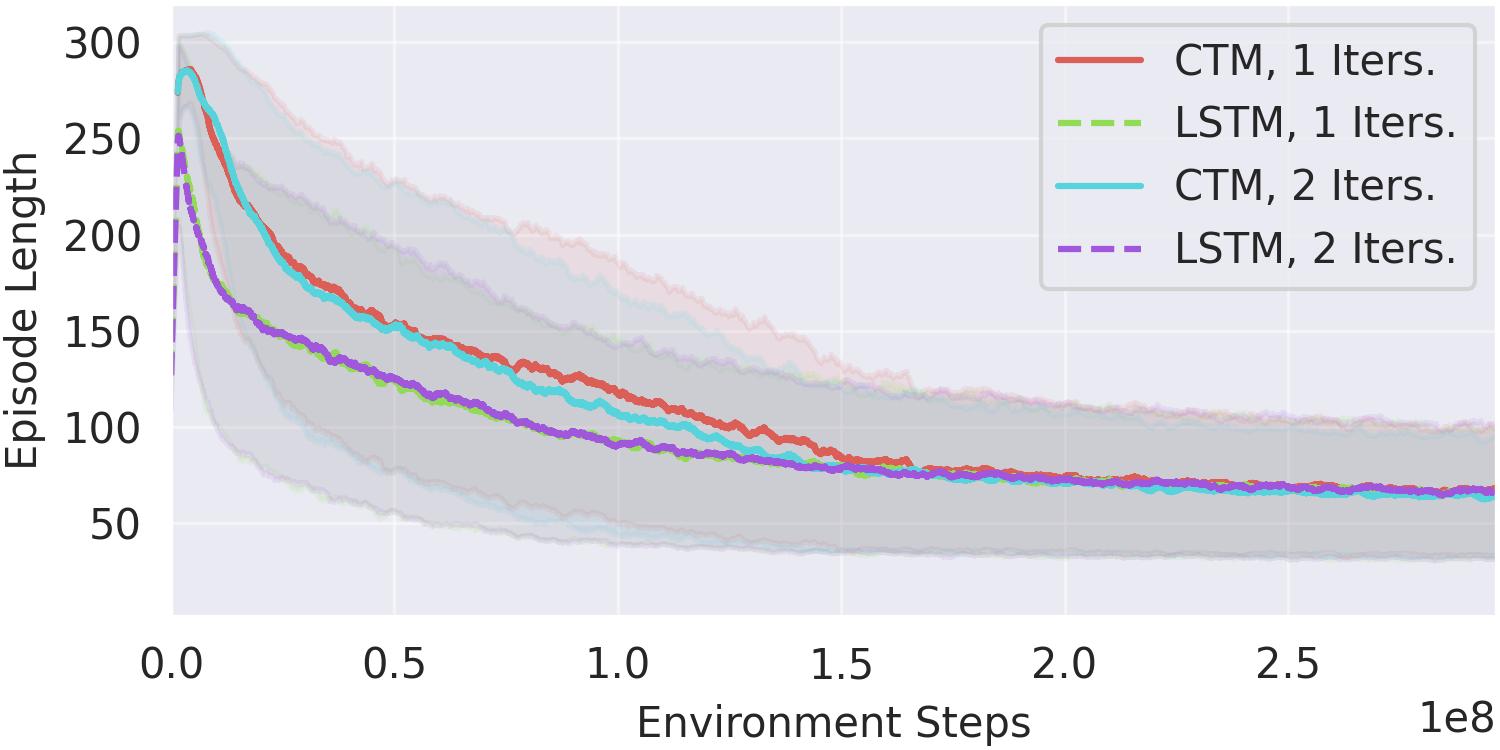

CTM extends to reinforcement learning, maintaining continuous activation histories across environment steps.

Crucially, CTM's internal time can persist across environment steps, which lets the agent maintain "trains of thought" that span multiple actions. This is more biologically plausible than resetting hidden states at each step.
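
The sketch below illustrates the contrast with per-step resets. Histories are cleared only when `reset()` is called at an episode boundary, so each `act()` call continues thinking from where the previous step stopped. The update rule, the action readout, and the one-value-per-neuron observation are toy assumptions, and all names are hypothetical.

```python
from collections import deque
from typing import Deque, List, Sequence


class PersistentThinkingAgent:
    """Keeps per-neuron activation histories alive across environment steps.

    The update rule and action readout are toy assumptions, not the CTM's.
    """

    def __init__(self, num_neurons: int, history_len: int, ticks_per_step: int) -> None:
        self.history_len = history_len
        self.ticks_per_step = ticks_per_step
        self.histories: List[Deque[float]] = [
            deque([0.0] * history_len, maxlen=history_len) for _ in range(num_neurons)
        ]

    def act(self, observation: Sequence[float]) -> int:
        # Think for a few internal ticks, continuing from where the last step left off.
        for _ in range(self.ticks_per_step):
            for history, x in zip(self.histories, observation):
                history.append(0.5 * history[-1] + x)
        # Toy readout: action 1 if the latest activations sum to a non-negative value.
        return int(sum(h[-1] for h in self.histories) >= 0)

    def reset(self) -> None:
        """Clear histories; called only at episode boundaries, not every step."""
        for history in self.histories:
            history.clear()
            history.extend([0.0] * self.history_len)


agent = PersistentThinkingAgent(num_neurons=2, history_len=3, ticks_per_step=2)
a1 = agent.act([1.0, 1.0])
a2 = agent.act([1.0, 1.0])
print(list(agent.histories[0]))  # [1.5, 1.75, 1.875]
```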

The success of CTM suggests that temporal dynamics are not merely a biological quirk to be abstracted away, but a fundamental computational resource. By allowing information to evolve over internal time, CTM achieves capabilities that elude architectures treating computation as instantaneous:

| Feature | Standard NN | LSTM/RNN | Transformer | CTM |
|---|---|---|---|---|
| Internal computation time | Fixed (depth) | Tied to sequence | Fixed (layers) | Adaptive |
| Per-neuron dynamics | No | No (shared weights) | No | Yes (NLMs) |
| Synchronization representation | No | No | Partial (attention) | Yes |
| Biological plausibility | Low | Medium | Low | High |
| Interpretability | Low | Medium | Medium | High |

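Continuous Thought Machines represent a fundamental rethinking of neural network computation. By embracing temporal dynamics rather than abstracting them away, CTM achieves:

- strong out-of-distribution generalization on mazes
- near-perfect accuracy on cumulative parity
- well-calibrated predictions
- adaptive allocation of computation
- interpretable reasoning trajectories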

The central insight is that neural synchronization, meaning how neurons fire together over time, provides a rich representational space that standard architectures miss. Rather than computing instant answers, CTMs think about their inputs and arrive at answers through temporal evolution.

For practitioners: CTM suggests that adding internal recurrence with per-neuron dynamics can unlock capabilities beyond what static architectures achieve. The success of synchronization-based representations hints at fundamentally new approaches to representation learning.

For researchers: This work opens a new paradigm in which time is a first-class computational citizen. The emergent behaviors (planning, calibration, adaptive compute) suggest we are only scratching the surface of what temporal neural architectures can achieve.

- Sakana AI, "Continuous Thought Machines": full interactive paper with visualizations and demos.
- PDF version: technical report with full mathematical details.
- GitHub repository: open-source implementation and experiment code.
- arXiv:2505.05522: academic preprint version.